Vulnerabilities Discovered in AI as a Service Platforms

Recent research has uncovered critical vulnerabilities in AI-as-a-service platforms such as Hugging Face, potentially exposing millions of private AI models and apps to security threats. According to findings by Wiz researchers Shir Tamari and Sagi Tzadik, these vulnerabilities could allow malicious actors to escalate privileges, gain unauthorized access to other customers’ models, and compromise continuous integration and continuous deployment (CI/CD) pipelines.

Two-Pronged Threats and Their Implications

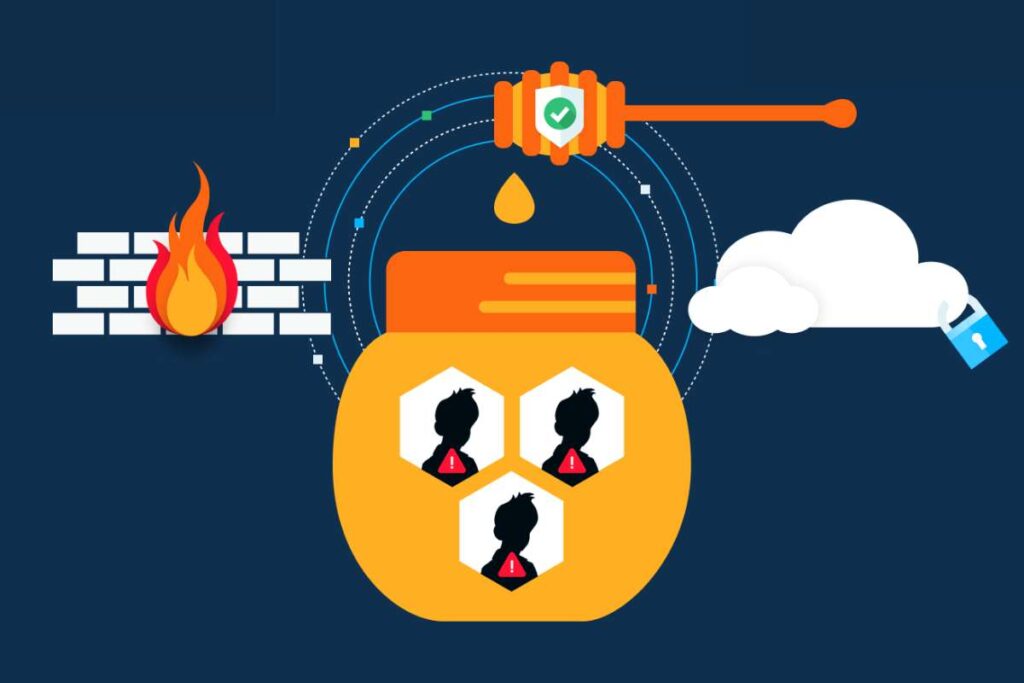

The identified threats take two forms: shared inference infrastructure takeover and shared CI/CD takeover. In the first, attackers could upload rogue models in pickle format, a serialization format that can run code when a file is loaded, and exploit the shared infrastructure to execute arbitrary code. Such breaches could allow threat actors to infiltrate Hugging Face’s systems, potentially compromising the entire service and accessing models belonging to other customers. The research also highlights the possibility of lateral movement within clusters, raising concerns about data privacy and further breaches.
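To illustrate why pickle-format models are risky, the following is a minimal sketch (not the Wiz researchers' tooling or Hugging Face's scanner) that inspects a pickle byte stream for opcodes able to import or call objects, without ever loading it. The function name `find_suspicious_opcodes`, the `HarmlessDemo` class, and the chosen opcode set are assumptions made for this example.

```python
import pickle
import pickletools
from typing import List

# Opcodes that let a pickle import a callable or invoke it during loading.
# This set is an illustrative assumption, not an exhaustive policy.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def find_suspicious_opcodes(data: bytes) -> List[str]:
    """Return the names of import/call opcodes found in a pickle stream.

    The stream is only disassembled, never unpickled, so nothing in it runs.
    """
    return [
        opcode.name
        for opcode, _arg, _pos in pickletools.genops(data)
        if opcode.name in SUSPICIOUS_OPCODES
    ]


class HarmlessDemo:
    """Hypothetical stand-in for a rogue model: unpickling it calls print()."""

    def __reduce__(self):
        # A real attacker would return something like os.system here.
        return (print, ("code ran during unpickling",))


plain_data = pickle.dumps({"weights": [0.1, 0.2]})
rogue_like = pickle.dumps(HarmlessDemo())

print(find_suspicious_opcodes(plain_data))
print(find_suspicious_opcodes(rogue_like))
```

Plain data such as dictionaries and lists of numbers needs none of these opcodes, while any object that defines `__reduce__` does. Legitimate model checkpoints also use them to rebuild tensors and classes, so a match signals that a file needs closer review rather than proving that it is malicious.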

Moreover, the study indicates the potential for remote code execution via a specially crafted Dockerfile when running applications on the Hugging Face Spaces service. This vulnerability could enable threat actors to manipulate container registries, compromising the integrity of the service’s internal infrastructure and posing additional security risks.

Mitigation Measures and Industry Responses

In response to the findings, the researchers recommend measures such as enabling IMDSv2 with a hop limit to prevent unauthorized access to the cloud instance metadata service and the credentials it exposes. Hugging Face, in a coordinated effort, has addressed the identified issues and advises users to exercise caution when using AI models, especially those from untrusted sources. Additionally, Anthropic’s disclosure regarding the risks associated with large language models (LLMs) underscores the need for vigilance when adopting AI technologies. The company detailed a technique called “many-shot jailbreaking,” which can be used to bypass the safety protections built into LLMs.
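As a rough sketch of the IMDSv2 recommendation, the helper below requires session tokens for instance metadata requests and caps the hop limit on a single EC2 instance. It assumes an AWS environment and a boto3 EC2 client passed in by the caller. The helper name `enforce_imdsv2`, the default hop limit of 1, and the instance ID in the usage comment are assumptions for illustration, not values taken from the research.

```python
def enforce_imdsv2(ec2_client, instance_id: str, hop_limit: int = 1):
    """Require IMDSv2 session tokens and limit how far token responses travel.

    `ec2_client` is expected to be a boto3 EC2 client. A hop limit of 1 is
    assumed here so that token responses do not travel past the instance
    itself, for example into a container behind an extra network hop.
    """
    return ec2_client.modify_instance_metadata_options(
        InstanceId=instance_id,
        HttpTokens="required",              # reject token-less IMDSv1 requests
        HttpPutResponseHopLimit=hop_limit,  # cap network hops for token responses
        HttpEndpoint="enabled",             # keep the metadata service reachable
    )


# Example usage (requires boto3 and AWS credentials; the ID is a placeholder):
#   import boto3
#   enforce_imdsv2(boto3.client("ec2"), "i-0123456789abcdef0")
```

Passing the client in as an argument keeps the helper easy to exercise with a stub before it is pointed at real infrastructure. The right hop limit depends on the workload: some container setups that legitimately need metadata access require a higher value.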

As AI continues to permeate various sectors, ensuring the security and integrity of AI-as-a-service platforms becomes paramount. The revelations from these studies serve as a wake-up call for the industry to fortify defenses against evolving cyber threats and adopt stringent security protocols to safeguard sensitive data and prevent unauthorized access.

Furthermore, the disclosure from Lasso Security underscores the broader implications of AI-related vulnerabilities. The possibility of generative AI models distributing malicious code packages highlights the importance of scrutinizing AI outputs and exercising caution when relying on AI technologies for coding solutions. As the threat landscape evolves, industry stakeholders must remain proactive in identifying and mitigating potential risks to ensure the responsible and secure deployment of AI technologies.