
In the world of probability theory and statistics, there’s this really cool thing called the Hidden Markov Model (HMM). It’s a powerful tool that gets used in all kinds of fields, like speech recognition and bioinformatics. Now, the name might sound complicated, but don’t worry! With a little patience and guidance, you can totally get the hang of it. In this article, we’ll dive into what the Hidden Markov Model is all about, how it’s used in different areas, and why it’s so important in today’s data analysis.

Understanding the Hidden Markov Model

The Hidden Markov Model (HMM) is a statistical model used to describe systems where observed outcomes are generated by a process with an underlying “hidden” state. Let’s break down this definition and explore the key concepts in more depth.

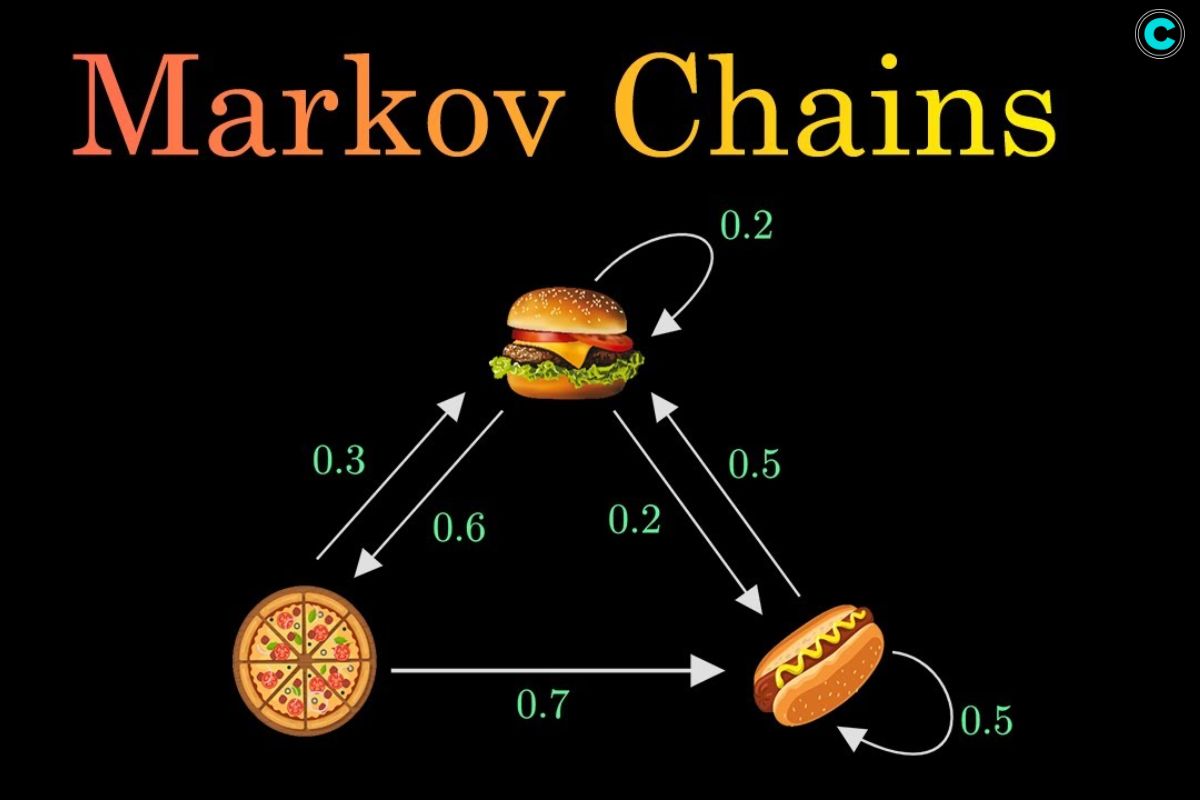

1. Markov Chain

At the core of it lies the Markov chain. A Markov chain is a mathematical system that transitions between different states with fixed probabilities. Each state represents a particular situation or condition, and the transitions between states occur based solely on the current state, not on the sequence of events that led to it.
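To see the "only the current state matters" rule in action, here is a minimal sketch of a two-state Markov chain. The states ("rainy", "sunny") and the transition probabilities are made-up assumptions for illustration. Tomorrow's distribution over states is computed from today's distribution alone, with no memory of earlier days.

```python
# Hypothetical two-state chain; the probabilities are illustrative, not from real data.
STATES = ["rainy", "sunny"]

# TRANSITION[i][j] = P(tomorrow = STATES[j] | today = STATES[i]); each row sums to 1.
TRANSITION = [
    [0.7, 0.3],  # from rainy
    [0.4, 0.6],  # from sunny
]


def next_distribution(dist):
    """Push a probability distribution over states one step forward.

    Only the current distribution is needed (the Markov property):
    p_next[j] = sum_i p[i] * TRANSITION[i][j].
    """
    n = len(dist)
    return [sum(dist[i] * TRANSITION[i][j] for i in range(n)) for j in range(n)]


def distribution_after(dist, steps):
    """Apply the one-step update `steps` times."""
    for _ in range(steps):
        dist = next_distribution(dist)
    return dist


if __name__ == "__main__":
    today = [1.0, 0.0]  # it is certainly rainy today
    for day in range(1, 4):
        today = next_distribution(today)
        print(day, dict(zip(STATES, (round(p, 3) for p in today))))
```

Starting from a day that is certainly rainy, the probability of rain goes 0.7, 0.61, 0.583, and keeps drifting toward the chain's long-run value of 4/7 (about 0.571), no matter where it started.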

2. Hidden States

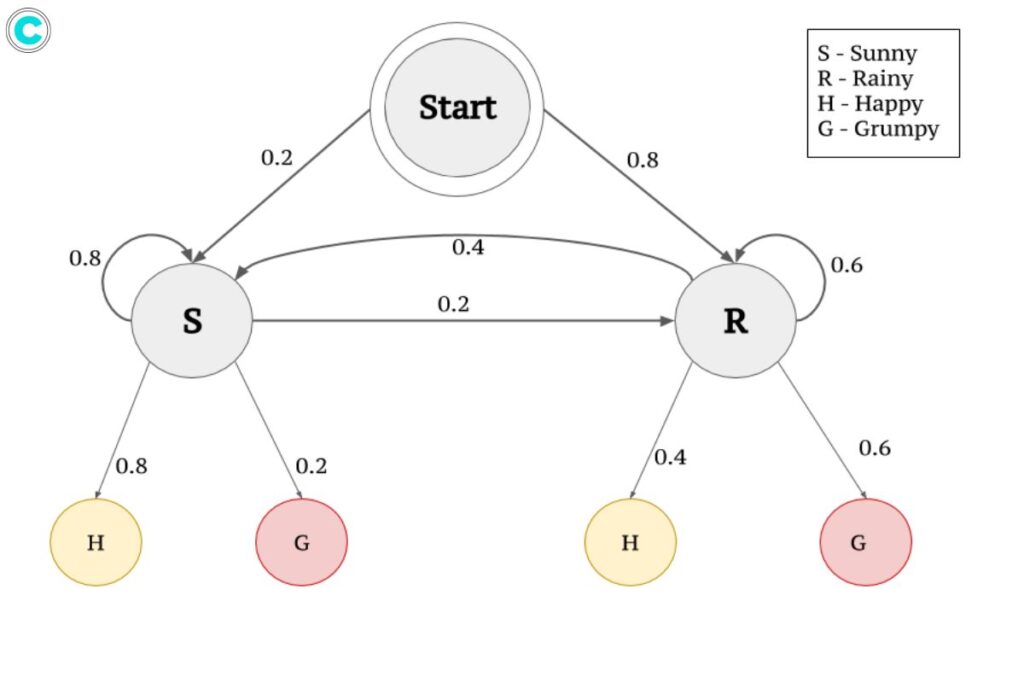

Unlike a standard Markov chain, where states are directly observable, the Hidden Markov Model introduces the concept of hidden states. These states cannot be directly observed but instead influence the observable outcomes, known as emissions or observations. Hidden states represent the underlying factors or conditions that generate the observed data.

3. Observations

Observations are the observable events or data points that are influenced by the underlying hidden states. These observations provide insights into the hidden states, allowing us to infer the sequence of hidden states based on the observed data. The relationship between the hidden states and the observations is probabilistic, meaning that each hidden state has a probability distribution over possible observations.
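Putting the three ingredients together, an HMM can be read as a recipe for generating data: pick a starting hidden state, let the hidden states follow a Markov chain, and at each step let the current hidden state emit an observation from its own distribution. The sketch below assumes hypothetical weather states, activities, and probabilities purely for illustration.

```python
import random

# Illustrative HMM parameters (assumptions, not estimated from data).
STATES = ["rainy", "sunny"]
OBSERVATIONS = ["walk", "shop", "clean"]
START = {"rainy": 0.6, "sunny": 0.4}
TRANSITION = {
    "rainy": {"rainy": 0.7, "sunny": 0.3},
    "sunny": {"rainy": 0.4, "sunny": 0.6},
}
EMISSION = {
    "rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def draw(dist, rng):
    """Sample one outcome from a {outcome: probability} dict."""
    outcomes = list(dist)
    return rng.choices(outcomes, weights=[dist[o] for o in outcomes], k=1)[0]


def sample_hmm(length, seed=0):
    """Generate `length` (hidden state, observation) pairs from the HMM."""
    rng = random.Random(seed)
    hidden, observed = [], []
    state = draw(START, rng)
    for t in range(length):
        if t > 0:
            state = draw(TRANSITION[state], rng)  # hidden step: Markov transition
        hidden.append(state)
        observed.append(draw(EMISSION[state], rng))  # visible step: emission
    return hidden, observed


if __name__ == "__main__":
    hidden, observed = sample_hmm(7, seed=42)
    print("hidden:  ", hidden)
    print("observed:", observed)
```

In a real problem only `observed` would be available; the point of the model is to reason backward from it to something like `hidden`.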

Example

To illustrate the concept of a Hidden Markov Model, let’s consider a simple example. Suppose we want to model the weather conditions (hidden states) based on the type of activities people engage in (observations). We can define two hidden states: “rainy” and “sunny”. The observations could be “walking”, “shopping”, or “cleaning”. The goal is to infer the hidden states (weather conditions) based on the observed activities.

In this example, the Hidden Markov Model describes how the weather on a given day determines the probability that someone is found walking, shopping, or cleaning, and how likely each kind of weather is to follow the previous day's weather. Working backward from the observed activities, we can then make probabilistic inferences about the hidden weather conditions.
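One common inference is finding the single most likely sequence of weather states behind a sequence of activities. The Viterbi algorithm does this with dynamic programming, keeping only the best path into each state at each step. This is a minimal sketch; the start, transition, and emission probabilities are illustrative assumptions (the example above does not give numbers), and it multiplies raw probabilities, which is only safe for short sequences.

```python
# Illustrative parameters for the weather/activity example (assumed values).
STATES = ("rainy", "sunny")
START = {"rainy": 0.6, "sunny": 0.4}
TRANSITION = {
    "rainy": {"rainy": 0.7, "sunny": 0.3},
    "sunny": {"rainy": 0.4, "sunny": 0.6},
}
EMISSION = {
    "rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def viterbi(observations):
    """Return (most likely hidden-state path, its joint probability with the observations)."""
    # best[s] = probability of the best path that ends in state s at the current step
    best = {s: START[s] * EMISSION[s][observations[0]] for s in STATES}
    back = []  # back[k][s] = best predecessor of state s at step k + 1
    for obs in observations[1:]:
        pointers, new_best = {}, {}
        for s in STATES:
            prev = max(STATES, key=lambda r: best[r] * TRANSITION[r][s])
            pointers[s] = prev
            new_best[s] = best[prev] * TRANSITION[prev][s] * EMISSION[s][obs]
        back.append(pointers)
        best = new_best
    # Trace the back-pointers from the most probable final state.
    last = max(STATES, key=lambda s: best[s])
    path = [last]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[last]


if __name__ == "__main__":
    print(viterbi(["walk", "shop", "clean"]))
```

Under these assumed numbers, the activities walk, shop, clean are best explained by sunny, rainy, rainy, with a joint probability of about 0.01344. For long sequences you would switch to log-probabilities (adding instead of multiplying) to avoid numerical underflow.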

Applications of the Hidden Markov Model

The Hidden Markov Model (HMM) is a versatile statistical model that finds applications in various fields. Let’s explore some of the key applications in more depth:

1. Speech Recognition

HMMs are widely used in speech recognition systems. In this application, HMMs are employed to model spoken language, where the hidden states represent phonemes or linguistic units, and the observations are acoustic features extracted from speech signals. By modeling the underlying sounds or phonemes that generate the speech signal, HMMs enable accurate recognition of spoken words.

2. Bioinformatics

HMMs play a crucial role in biological sequence analysis, particularly in DNA sequence alignment, gene prediction, and protein structure prediction. In this context, hidden states in HMMs may correspond to different structural motifs or functional regions within biological sequences. HMMs are effective in modeling the correlations between adjacent symbols, domains, or events in biological sequences.

3. Financial Markets

HMMs are used in modeling financial time series data. In this application, hidden states represent different market regimes, such as bull markets or bear markets, while observations include price movements and trading volumes. HMMs enable the identification of different market conditions and can be used for forecasting and risk management in finance.

4. Natural Language Processing

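HMMs find applications in various natural language processing tasks, including part-of-speech tagging, named entity recognition, and word alignment in statistical machine translation. In part-of-speech tagging, for example, the hidden states are grammatical tags (noun, verb, and so on) and the observations are the words, so the model captures both which tags tend to follow one another and which words each tag tends to produce. Tagging a sentence then amounts to finding the most likely sequence of hidden tags for the observed words, which can be done efficiently even for long sentences.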
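When a corpus has already been tagged by hand, the transition probabilities P(tag | previous tag) and emission probabilities P(word | tag) for part-of-speech tagging can be estimated simply by counting and normalizing. A minimal sketch, assuming a hypothetical toy corpus of (word, tag) pairs, a made-up `<s>` start symbol, lowercased words, and no smoothing (so any unseen word gets zero probability, which a practical tagger would have to fix):

```python
from collections import Counter, defaultdict


def _normalize(table):
    """Turn {key: Counter} into {key: {item: relative frequency}}."""
    result = {}
    for key, counts in table.items():
        total = sum(counts.values())
        result[key] = {item: n / total for item, n in counts.items()}
    return result


def estimate_hmm(tagged_sentences):
    """Maximum-likelihood transition and emission tables from tagged sentences.

    Each sentence is a list of (word, tag) pairs; "<s>" is a hypothetical
    start-of-sentence tag used for the first transition.
    """
    transitions = defaultdict(Counter)  # transitions[previous_tag][tag] = count
    emissions = defaultdict(Counter)    # emissions[tag][word] = count
    for sentence in tagged_sentences:
        prev = "<s>"
        for word, tag in sentence:
            transitions[prev][tag] += 1
            emissions[tag][word.lower()] += 1
            prev = tag
    return _normalize(transitions), _normalize(emissions)


if __name__ == "__main__":
    corpus = [
        [("The", "DET"), ("dog", "NOUN"), ("runs", "VERB")],
        [("A", "DET"), ("dog", "NOUN"), ("barks", "VERB")],
    ]
    trans, emit = estimate_hmm(corpus)
    print(trans)
    print(emit)
```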

5. Robotics and Control Systems

In robotics, HMMs can be applied to robot localization and mapping. Hidden states in this application represent the robot’s position, while observations are sensor measurements. HMMs enable robots to make decisions based on uncertain information and effectively navigate their environment.
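Grid-based robot localization can be read as HMM filtering: the forward algorithm, normalized after every step, gives the probability of each position given all sensor readings so far (this is often called a discrete Bayes filter). The corridor layout, motion model, and sensor accuracy below are hypothetical assumptions chosen to keep the sketch small.

```python
# Hypothetical 1-D corridor: True marks cells with a door the robot's sensor can detect.
DOORS = [True, False, False, True, False]
N = len(DOORS)
P_MOVE = 0.8    # assumed chance the robot actually advances one cell per step
P_SENSOR = 0.9  # assumed chance the door sensor reports correctly


def predict(belief):
    """Transition step: move probability mass one cell to the right."""
    new = [0.0] * N
    for i, p in enumerate(belief):
        target = min(i + 1, N - 1)  # the robot cannot leave the corridor
        new[target] += p * P_MOVE
        new[i] += p * (1 - P_MOVE)
    return new


def update(belief, saw_door):
    """Observation step: weight each cell by how well it explains the reading, then normalize."""
    weighted = [
        p * (P_SENSOR if DOORS[i] == saw_door else 1 - P_SENSOR)
        for i, p in enumerate(belief)
    ]
    total = sum(weighted)
    return [w / total for w in weighted]


def localize(readings):
    """Return the belief over cells after a sequence of door/no-door readings."""
    belief = [1.0 / N] * N  # start with no idea where the robot is
    belief = update(belief, readings[0])
    for saw_door in readings[1:]:
        belief = update(predict(belief), saw_door)
    return belief


if __name__ == "__main__":
    print([round(p, 3) for p in localize([True, False, False, True])])
```

After seeing a door, two blank walls, and then another door while moving right, most of the belief (roughly 0.8 under these assumptions) ends up on cell 3, the only position consistent with that pattern.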

These are just a few examples of the diverse range of applications for Hidden Markov Models. HMMs are also used in areas such as gesture recognition, weather prediction, pattern recognition, and more. The versatility of HMMs lies in their ability to model systems with hidden structures and probabilistic relationships between observations and hidden states.

FAQs

1. What distinguishes the Hidden Markov Model from other statistical models?

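The key distinction lies in its hidden states, which evolve over time as a Markov chain and generate the observations. Unlike a plain Markov chain, those states are not directly observable; unlike a simple mixture model, each hidden state depends on the one before it. This makes HMMs well suited to sequential systems whose underlying states can only be inferred from the data.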

2. How are the parameters of a Hidden Markov Model estimated?

Parameters, such as transition probabilities and emission probabilities, are typically estimated using algorithms like the Baum-Welch algorithm, which employs the Expectation-Maximization (EM) technique.
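To give a feel for how Baum-Welch works, here is a compact pure-Python sketch for a single sequence of discrete observation symbols (integers 0 to K-1). The E-step runs a scaled forward-backward pass to get expected state occupancies and transition counts; the M-step turns those expected counts into new probabilities. Assumptions: one training sequence, no smoothing, no convergence test, and the starting parameters in the usage example are arbitrary.

```python
import math


def forward_backward(obs, pi, A, B):
    """Scaled forward-backward pass; log P(obs) equals the sum of log(scale)."""
    T, N = len(obs), len(pi)
    alpha, scale = [], []
    for t in range(T):
        if t == 0:
            row = [pi[i] * B[i][obs[0]] for i in range(N)]
        else:
            row = [sum(alpha[t - 1][i] * A[i][j] for i in range(N)) * B[j][obs[t]]
                   for j in range(N)]
        c = sum(row)
        scale.append(c)
        alpha.append([x / c for x in row])  # scaled to sum to 1
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        beta[t] = [
            sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(N)) / scale[t + 1]
            for i in range(N)
        ]
    return alpha, beta, scale


def baum_welch(obs, pi, A, B, n_iter=20):
    """EM re-estimation of (pi, A, B); also returns the log-likelihood before each update."""
    T, N, K = len(obs), len(pi), len(B[0])
    history = []
    for _ in range(n_iter):
        alpha, beta, scale = forward_backward(obs, pi, A, B)
        history.append(sum(math.log(c) for c in scale))
        # E-step: gamma[t][i] = P(state i at time t | obs)
        gamma = [[alpha[t][i] * beta[t][i] for i in range(N)] for t in range(T)]
        # Expected number of i -> j transitions, summed over time
        xi_sum = [[0.0] * N for _ in range(N)]
        for t in range(T - 1):
            for i in range(N):
                for j in range(N):
                    xi_sum[i][j] += (alpha[t][i] * A[i][j] * B[j][obs[t + 1]]
                                     * beta[t + 1][j] / scale[t + 1])
        # M-step: normalize the expected counts
        pi = gamma[0][:]
        A = [[xi_sum[i][j] / sum(gamma[t][i] for t in range(T - 1)) for j in range(N)]
             for i in range(N)]
        B = [[sum(gamma[t][i] for t in range(T) if obs[t] == k)
              / sum(gamma[t][i] for t in range(T)) for k in range(K)]
             for i in range(N)]
    return pi, A, B, history


if __name__ == "__main__":
    data = [0, 1, 2, 2, 1, 0, 0, 2, 1, 2, 2, 0, 1, 1, 0]
    pi, A, B, hist = baum_welch(data, [0.5, 0.5], [[0.6, 0.4], [0.3, 0.7]],
                                [[0.5, 0.3, 0.2], [0.1, 0.4, 0.5]], n_iter=30)
    print("log-likelihood:", round(hist[0], 4), "->", round(hist[-1], 4))
```

A useful property to check is that each EM iteration never lowers the log-likelihood, which is exactly what the returned `history` lets you verify. It only promises a local maximum, though.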

3. Can Hidden Markov Models handle continuous data?

Yes, HMMs can handle both discrete and continuous observations. For continuous data, each hidden state's emission distribution becomes a probability density, commonly a single Gaussian or a Gaussian mixture model (GMM).
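As a minimal sketch of the continuous case, the forward algorithm below uses one Gaussian per hidden state, the simplest special case of a GMM emission (a GMM would replace `gaussian_pdf` with a weighted sum of such densities). The two "regimes" in the usage example, loosely in the spirit of the financial-markets application, have hypothetical parameters, not fitted ones.

```python
import math


def gaussian_pdf(x, mean, std):
    """Normal density N(x; mean, std^2)."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))


def forward_log_likelihood(xs, pi, A, means, stds):
    """Scaled forward algorithm for an HMM whose state i emits N(means[i], stds[i]^2).

    Returns (log-likelihood of xs, filtered state probabilities after the last x).
    """
    N = len(pi)
    log_lik = 0.0
    alpha = None
    for t, x in enumerate(xs):
        emit = [gaussian_pdf(x, means[i], stds[i]) for i in range(N)]
        if t == 0:
            row = [pi[i] * emit[i] for i in range(N)]
        else:
            row = [sum(alpha[i] * A[i][j] for i in range(N)) * emit[j] for j in range(N)]
        c = sum(row)
        log_lik += math.log(c)
        alpha = [r / c for r in row]  # P(state at t | x_1..x_t)
    return log_lik, alpha


if __name__ == "__main__":
    # Hypothetical "calm" vs "volatile" regimes for daily returns (in percent).
    pi = [0.5, 0.5]
    A = [[0.95, 0.05], [0.10, 0.90]]
    means, stds = [0.05, -0.1], [0.5, 2.0]
    ll, regime = forward_log_likelihood([0.1, -0.2, 2.8, -3.1], pi, A, means, stds)
    print(round(ll, 3), [round(p, 3) for p in regime])
```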

4. Are Hidden Markov Models sensitive to the choice of initial parameters?

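Yes, to some degree. The Baum-Welch algorithm is only guaranteed to converge to a local maximum of the likelihood, so different initial parameters can lead to different final models. In practice, training from several initializations and keeping the best-scoring model, together with sufficient data, usually gives reliable results.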

5. How do Hidden Markov Models handle missing data?

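Algorithms such as forward-backward handle missing observations naturally, assuming the values are missing at random. At a time step with no observation, the emission term is simply left out (equivalently, set to 1), which marginalizes over every value the missing observation could have taken. The likelihood of the remaining data is still obtained by summing over all possible hidden state sequences.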
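The forward pass alone (the first half of forward-backward) is enough to compute that likelihood. In this minimal sketch a missing observation is marked with `None` and contributes an emission factor of 1. The weather/activity parameters are illustrative assumptions, and the raw (unscaled) probabilities are only suitable for short sequences.

```python
# Illustrative parameters (assumed values for the weather/activity example).
STATES = ("rainy", "sunny")
START = {"rainy": 0.6, "sunny": 0.4}
TRANSITION = {
    "rainy": {"rainy": 0.7, "sunny": 0.3},
    "sunny": {"rainy": 0.4, "sunny": 0.6},
}
EMISSION = {
    "rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def forward_likelihood(observations):
    """P(observations) via the forward algorithm; None marks a missing observation."""
    alpha = None
    for t, obs in enumerate(observations):
        new = {}
        for s in STATES:
            prior = START[s] if t == 0 else sum(alpha[r] * TRANSITION[r][s] for r in STATES)
            # A missing observation contributes a factor of 1, i.e. it is summed out.
            new[s] = prior * (1.0 if obs is None else EMISSION[s][obs])
        alpha = new
    return sum(alpha.values())


if __name__ == "__main__":
    print(forward_likelihood(["walk", "shop", "clean"]))
    print(forward_likelihood(["walk", None, "clean"]))
```

A quick sanity check on the design: the likelihood with a gap equals the sum of the likelihoods over every value that could have filled the gap, which is exactly what "marginalizing" means here.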

Conclusion

The Hidden Markov Model serves as a versatile and powerful tool in probabilistic modeling and data analysis, with applications spanning diverse domains. By understanding its fundamental concepts and mechanics, one can leverage the predictive capabilities of HMMs to gain valuable insights from complex datasets. As technology continues to advance, the Hidden Markov Model remains a cornerstone of modern statistical methods, offering a robust framework for understanding and modeling dynamic systems.