(Image source: Xoriant)

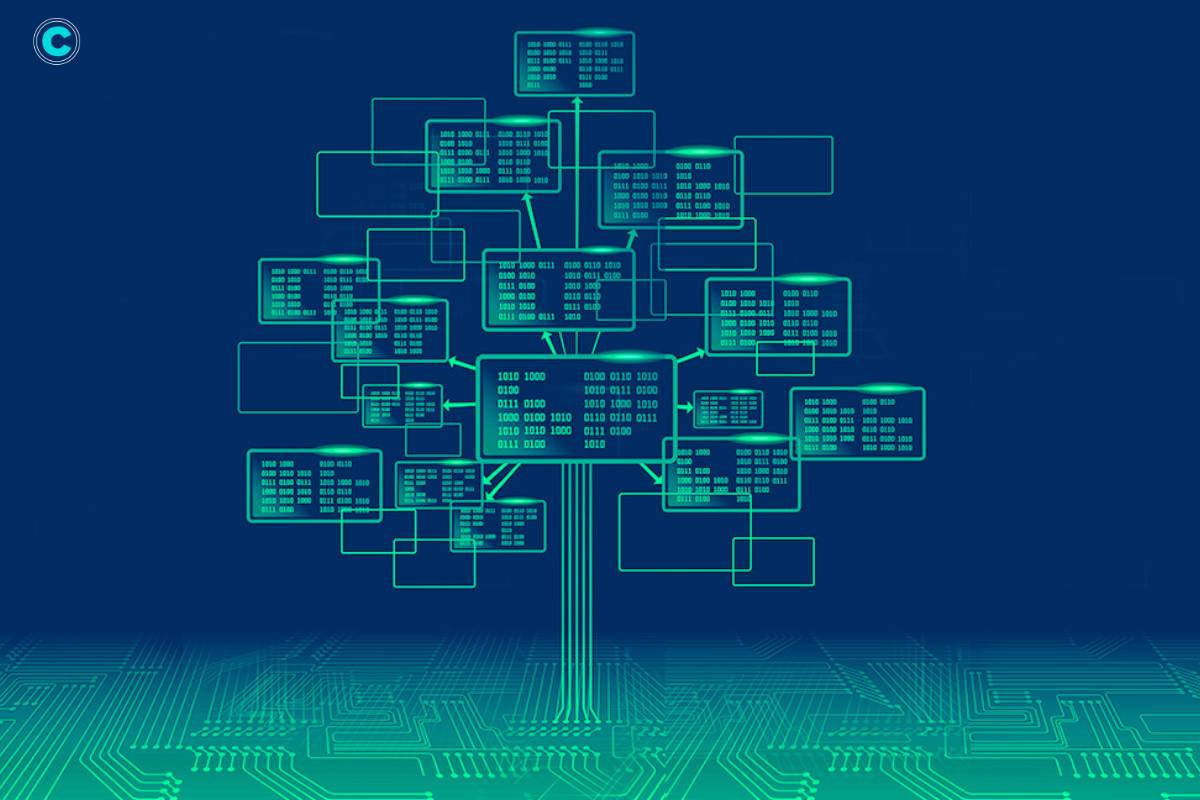

In machine learning, decision trees are fundamental tools for data analysis and predictive modeling. Their intuitive structure and versatility make them a cornerstone in fields from finance to healthcare to marketing. In this article, we'll explore what decision trees are, their components, how they work, their applications and advantages, and their significance in machine learning.

What is a Decision Tree?

At its core, a decision tree is a graphical representation of possible solutions to a decision based on certain conditions. It resembles an inverted tree: each internal node represents a "decision" based on a particular feature, each branch represents an outcome of that decision, and each leaf node represents a class label or a decision reached after evaluating the conditions along the path from the root. In simpler terms, it's like a flowchart that helps in decision-making.

Components of a Decision Tree:

A decision tree is a hierarchical, tree-like structure that consists of several components. Let’s explore the key components of a decision tree:

1. Root Node:

The root node is the topmost node in a decision tree. It represents the initial decision or feature used to split the data. The root node does not have any incoming branches and serves as the starting point for the decision-making process.

2. Internal Nodes (Decision Nodes):

Internal nodes are the nodes in the middle of the decision tree. They represent decisions based on features. Each internal node evaluates a specific feature and splits the data into subsets based on the feature’s values. These nodes guide the flow of the decision tree and lead to further branching.

3. Branches:

Branches are the arrows or lines connecting nodes in a decision tree. They represent the possible outcomes of a decision. Each branch corresponds to a specific value or condition of the feature being evaluated at an internal node. The branches guide the traversal of the decision tree from the root node to the leaf nodes.

4. Leaf Nodes (Terminal Nodes):

Leaf nodes are the terminal nodes at the end of the branches in a decision tree. They indicate the final decision or classification. Each leaf node represents a specific outcome or class label. The leaf nodes do not split further and provide the final predictions or decisions based on the path followed through the decision tree.
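The four components can be sketched as a small data structure. This is a minimal sketch that assumes categorical features with one branch per feature value. The `Node` class, the `predict` helper, and the toy "play tennis" tree are hypothetical illustrations, not a specific library's API.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class Node:
    """One node of a hypothetical decision tree.

    The root and internal nodes store the feature they test and one child per
    branch; leaf nodes have no branches and store only a prediction.
    """
    feature: Optional[str] = None                              # feature tested here (None for a leaf)
    branches: Dict[Any, "Node"] = field(default_factory=dict)  # branch value -> child node
    prediction: Any = None                                     # class label (leaf nodes only)

    def is_leaf(self) -> bool:
        return not self.branches


def predict(root: Node, sample: Dict[str, Any]) -> Any:
    """Follow one root-to-leaf path, taking the branch that matches the sample."""
    node = root
    while not node.is_leaf():
        node = node.branches[sample[node.feature]]
    return node.prediction


# Hypothetical toy tree: should we play tennis?
tree = Node(
    feature="outlook",                                # root node
    branches={
        "sunny": Node(                                # internal (decision) node
            feature="humidity",
            branches={
                "high": Node(prediction="no"),        # leaf nodes
                "normal": Node(prediction="yes"),
            },
        ),
        "overcast": Node(prediction="yes"),
        "rain": Node(prediction="no"),
    },
)

print(predict(tree, {"outlook": "sunny", "humidity": "normal"}))  # yes
```

Each dictionary entry in `branches` plays the role of a branch, and a prediction is simply the label of the leaf reached by following the matching branches from the root.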

How Decision Trees Work

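Decision trees work by recursively splitting the dataset into subsets, at each step choosing the feature (and, for numerical features, the threshold) that best separates the target values. The goal is to create homogeneous subsets that contain instances with similar target values. Splitting continues until each subset is pure, meaning it contains instances of only one class, or until a stopping criterion such as a maximum depth or a minimum number of samples per node is reached. Different algorithms use different split criteria: CART typically uses Gini impurity, while ID3 uses information gain and C4.5 uses its normalized variant, the gain ratio. Regression trees commonly choose splits that minimize the squared error within each subset.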
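The splitting procedure can be sketched in plain Python. This is a minimal, unoptimized CART-style sketch under stated assumptions: numerical features, binary splits of the form `x[j] <= t` using observed values as candidate thresholds, and hypothetical helper names (`gini`, `entropy`, `impurity_decrease`, `best_split`, `build`). With `impurity=entropy`, `impurity_decrease` computes information gain; with `impurity=gini` it computes the decrease in Gini impurity.

```python
import math
from collections import Counter
from typing import List, Optional, Tuple


def gini(labels: List[str]) -> float:
    """Gini impurity: 1 - sum_k p_k^2 (0 for a pure node)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels: List[str]) -> float:
    """Shannon entropy in bits: -sum_k p_k * log2(p_k) (0 for a pure node)."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def impurity_decrease(parent, left, right, impurity=entropy) -> float:
    """Parent impurity minus the size-weighted impurity of the two children."""
    n = len(parent)
    weighted = len(left) / n * impurity(left) + len(right) / n * impurity(right)
    return impurity(parent) - weighted


def best_split(X, y, impurity=gini) -> Optional[Tuple[int, float]]:
    """Try every (feature, threshold) pair and keep the one with the largest decrease."""
    best, best_gain = None, 0.0
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[j] <= t]
            right = [lab for row, lab in zip(X, y) if row[j] > t]
            if not left or not right:          # skip splits that leave a side empty
                continue
            gain = impurity_decrease(y, left, right, impurity)
            if gain > best_gain:
                best, best_gain = (j, t), gain
    return best


def build(X, y, depth=0, max_depth=3):
    """Recursively split until a node is pure, no split helps, or max_depth is hit."""
    split = best_split(X, y) if depth < max_depth and len(set(y)) > 1 else None
    if split is None:
        return Counter(y).most_common(1)[0][0]  # leaf: majority class
    j, t = split
    left = [i for i, row in enumerate(X) if row[j] <= t]
    right = [i for i, row in enumerate(X) if row[j] > t]
    return {
        "feature": j,
        "threshold": t,
        "left": build([X[i] for i in left], [y[i] for i in left], depth + 1, max_depth),
        "right": build([X[i] for i in right], [y[i] for i in right], depth + 1, max_depth),
    }
```

For example, with `X = [[1.0], [2.0], [3.0], [10.0], [11.0], [12.0]]` and `y = ["a", "a", "a", "b", "b", "b"]`, `build(X, y)` returns a single split on feature 0 at threshold 3.0 with leaves `"a"` and `"b"`. The `max_depth` argument is one of the stopping criteria described above; real implementations add others, such as a minimum number of samples per leaf.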

Applications of Decision Trees:

- Classification: They are widely used for classification tasks, such as predicting whether an email is spam or not, classifying diseases based on symptoms, or identifying customer segments for targeted marketing.

- Regression: They can also perform regression tasks, where the target variable is continuous rather than categorical. For example, predicting house prices based on features like size, location, and number of bedrooms.

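- Anomaly Detection: Tree-based methods can flag outliers or anomalies in data. For example, isolation forests build random trees and treat instances that are isolated after unusually few splits as likely anomalies.

- Feature Selection: They can help identify the most important features in a dataset, for example by ranking features by how much they reduce impurity across the tree's splits, aiding in feature selection for other machine learning models.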

- Decision Support Systems: They are used in decision support systems across various domains, providing a structured framework for decision-making based on available data.
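For regression, a common approach (used by CART) is to pick the split that minimizes the squared error of the two resulting subsets and let each leaf predict the mean target of its training samples. The sketch below fits a one-split tree (a "stump") on made-up house-size and price data; `sse` and `fit_stump` are hypothetical helper names, and the code assumes at least two distinct feature values.

```python
from statistics import mean
from typing import List, Tuple


def sse(values: List[float]) -> float:
    """Sum of squared errors around the mean, which is what a regression leaf predicts."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def fit_stump(x: List[float], y: List[float]) -> Tuple[float, float, float]:
    """Find the threshold on x that minimizes the total SSE of the two leaves.

    Returns (threshold, left_mean, right_mean); each leaf predicts its mean target.
    Assumes x contains at least two distinct values.
    """
    best = None
    for t in sorted(set(x))[:-1]:          # the largest value would leave the right side empty
        left = [yi for xi, yi in zip(x, y) if xi <= t]
        right = [yi for xi, yi in zip(x, y) if xi > t]
        cost = sse(left) + sse(right)
        if best is None or cost < best[0]:
            best = (cost, t, mean(left), mean(right))
    _, t, left_mean, right_mean = best
    return t, left_mean, right_mean


# Made-up data: house size (square meters) -> price (thousands)
sizes = [50, 60, 70, 120, 130, 140]
prices = [150, 160, 170, 300, 310, 320]
threshold, left_pred, right_pred = fit_stump(sizes, prices)
print(threshold, left_pred, right_pred)  # 70 160 310
```

Houses up to 70 square meters fall in the left leaf and are predicted at 160; larger houses are predicted at 310. A full regression tree repeats this search recursively inside each leaf.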

Advantages of Decision Trees:

They offer several advantages that make them a popular choice in machine learning:

1. Interpretability:

They are easy to interpret and understand, making them suitable for both experts and non-experts. Their hierarchical structure allows for clear visualization of the decision-making process, making it easier to see which attributes are most important.

2. Minimal Data Preprocessing:

They can handle both numerical and categorical data without extensive preprocessing. Continuous values are handled by choosing thresholds (for example, "income <= 50"), which effectively turns them into categorical ranges at each split. Because a split depends only on the ordering of a feature's values, features do not need to be scaled or normalized. Depending on the implementation, however, categorical features may still need to be encoded numerically.
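To illustrate why threshold splits make feature scaling unnecessary, this sketch finds the best Gini split on a raw feature and on a log-transformed copy of it. The helper names and the income data are made up for illustration. Because any strictly increasing transformation preserves the ordering of values, both searches produce the same partition of the rows.

```python
import math
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_partition(x, y):
    """Return the row indices sent left (x <= t) by the lowest-impurity threshold."""
    best_cost, best_left = float("inf"), None
    for t in sorted(set(x))[:-1]:
        left = [i for i, v in enumerate(x) if v <= t]
        right = [i for i, v in enumerate(x) if v > t]
        cost = (len(left) * gini([y[i] for i in left])
                + len(right) * gini([y[i] for i in right])) / len(x)
        if cost < best_cost:
            best_cost, best_left = cost, left
    return best_left


income = [20, 35, 50, 80, 120, 200]                 # made-up values (thousands)
label = ["no", "no", "no", "yes", "yes", "yes"]
log_income = [math.log(v) for v in income]          # strictly increasing transformation

print(best_partition(income, label))      # [0, 1, 2]
print(best_partition(log_income, label))  # [0, 1, 2]
```

Only the threshold value changes (50 versus log 50); the rows on each side of the split are identical.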

3. Non-parametric:

They make no assumptions about the underlying distribution of the data, which makes them flexible. They are considered non-parametric because their structure is not fixed in advance: the number of splits and leaves grows with the data rather than being set by a predetermined set of parameters. This flexibility allows decision trees to capture complex, nonlinear relationships and feature interactions without being constrained by distributional assumptions.

4. Handles Missing Values:

Some decision tree algorithms can handle missing values directly, so imputation is not always required. For example, CART can fall back on surrogate splits that use correlated features, and C4.5 distributes instances with missing values fractionally across branches. Support varies by implementation, but where it exists it is an advantage for real-world datasets, which often contain missing values.
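Strategies for missing values vary. The sketch below shows one simple heuristic, not CART's surrogate splits or C4.5's fractional weighting: at prediction time, a sample whose tested feature is missing follows the branch that received more training samples. The dict layout and its keys (`feature`, `threshold`, `n_left`, `n_right`, `left`, `right`) are assumptions for illustration.

```python
from typing import Any, Dict, Optional


def route(node: Any, sample: Dict[str, Optional[float]]) -> Any:
    """Predict with a threshold tree, sending missing values down the larger branch.

    Internal nodes are dicts with hypothetical keys 'feature', 'threshold',
    'left', 'right', 'n_left' and 'n_right' (training samples per branch);
    leaves are plain labels.
    """
    while isinstance(node, dict):
        value = sample.get(node["feature"])
        if value is None:                        # missing: follow the majority branch
            go_left = node["n_left"] >= node["n_right"]
        else:
            go_left = value <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node


tree = {"feature": "age", "threshold": 30, "n_left": 80, "n_right": 20,
        "left": "approve", "right": "review"}

print(route(tree, {"age": 45}))    # review
print(route(tree, {"age": None}))  # approve (80 of 100 training rows went left)
```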

5. Scalability:

Decision trees can be trained efficiently on large datasets, and prediction is especially cheap: a prediction follows a single root-to-leaf path, so for a reasonably balanced tree its cost grows roughly logarithmically with the number of data points used to train the tree. This makes decision trees a practical choice for analyzing large datasets.
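As a rough worked example, assume a perfectly balanced binary tree with one training sample per leaf (real trees are usually unbalanced and stop splitting earlier). A prediction makes one comparison per level, so for $n$ training samples the depth $d$ is:

$$
d = \lceil \log_2 n \rceil, \qquad
n = 10^6:\quad 2^{19} = 524{,}288 < 10^6 \le 2^{20} = 1{,}048{,}576 \;\Rightarrow\; d = 20
$$

Under this assumption, even a tree trained on a million points answers a query in about 20 comparisons. An unbalanced tree can be much deeper, up to $n - 1$ levels in the degenerate worst case.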

FAQs:

1. How do decision trees handle categorical variables?

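They can handle categorical variables by splitting the data on category values. Algorithms such as ID3 and C4.5 create one branch per category (a multiway split), while CART uses binary splits that divide the categories into two groups.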
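A multiway split with one branch per category can be sketched as follows, scoring the split by the size-weighted Gini impurity of its branches (lower is better). The helper names and the color/purchase data are hypothetical.

```python
from collections import Counter, defaultdict


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def multiway_split(categories, labels):
    """Create one branch per category; return (branches, weighted child Gini)."""
    branches = defaultdict(list)
    for cat, lab in zip(categories, labels):
        branches[cat].append(lab)
    n = len(labels)
    weighted = sum(len(b) / n * gini(b) for b in branches.values())
    return dict(branches), weighted


color = ["red", "red", "blue", "blue", "green", "green"]
bought = ["yes", "yes", "no", "no", "yes", "no"]
branches, impurity = multiway_split(color, bought)
print(branches)  # {'red': ['yes', 'yes'], 'blue': ['no', 'no'], 'green': ['yes', 'no']}
print(impurity)  # about 0.167: only the "green" branch is impure
```

A CART-style binary split would instead compare groupings such as {red} versus {blue, green}, which keeps the tree binary at the cost of searching over category subsets.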

2. Are decision trees prone to overfitting?

Yes, especially deep trees, which can keep splitting until they memorize the training data. Techniques like pruning, limiting the maximum depth of the tree, requiring a minimum number of samples per leaf, or using ensemble methods like random forests can mitigate overfitting.

3. What is pruning in decision trees?

Pruning is the process of removing parts of the decision tree that do not provide significant predictive power, thereby reducing complexity and improving generalization performance.
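One widely used post-pruning method is minimal cost-complexity pruning, introduced with CART and available in scikit-learn through the `ccp_alpha` parameter. It scores a subtree $T$ by its total leaf error (or impurity) $R(T)$ plus a penalty proportional to its number of leaves $|\tilde{T}|$, with $\alpha \ge 0$ controlling the trade-off. The worked comparison below uses hypothetical numbers: a full tree $T_A$ with 8 leaves and error 0.10, and a pruned tree $T_B$ with 3 leaves and error 0.14.

$$
R_\alpha(T) = R(T) + \alpha\,|\tilde{T}|
$$

$$
\alpha = 0.01:\quad R_\alpha(T_A) = 0.10 + 0.01 \cdot 8 = 0.18, \qquad R_\alpha(T_B) = 0.14 + 0.01 \cdot 3 = 0.17
$$

At this $\alpha$ the smaller tree $T_B$ is preferred even though its raw error is higher. The two trees tie when $0.10 + 8\alpha = 0.14 + 3\alpha$, that is at $\alpha = 0.008$, and larger values of $\alpha$ favor ever smaller trees.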

4. Are decision trees sensitive to outliers?

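Classification trees are relatively robust to outliers in the input features, because a split depends only on the ordering of values, not on their magnitude. They can be more sensitive to outliers in the target variable: regression trees that minimize squared error, as in CART, predict the mean of each leaf, so a few extreme values can pull leaf predictions and bias where splits are placed.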
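A small arithmetic sketch with made-up numbers shows why squared-error regression leaves are sensitive to target outliers: one extreme value moves a leaf's mean prediction far more than its median. Some implementations offer a median-based alternative; for example, scikit-learn's `DecisionTreeRegressor` has an `absolute_error` criterion whose leaves predict the median.

```python
from statistics import mean, median

# Made-up target values (house prices, thousands) that end up in one leaf
leaf_targets = [200, 210, 220, 230]
# The same leaf after a single extreme value is added
with_outlier = leaf_targets + [5000]

# Mean-based leaf prediction jumps from 215 to 1172
print(mean(leaf_targets), mean(with_outlier))
# Median-based leaf prediction barely moves: 215 to 220
print(median(leaf_targets), median(with_outlier))
```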

5. Can decision trees handle multicollinearity?

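Multicollinearity has little effect on their predictive performance, because at each node the tree simply picks the single best split among the candidate features and does not estimate coefficients that correlated features would make unstable. It does affect interpretation, however: when features are highly correlated, the tree may use them interchangeably, so feature importance can be split among them or assigned somewhat arbitrarily to one of them.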

Conclusion:

Decision trees are powerful and versatile tools in machine learning, offering simplicity, interpretability, and effectiveness across a wide range of applications. Understanding their structure, working principles, and applications helps data scientists and practitioners use them to solve complex problems and make informed decisions.