Can you spot a deepfake? A few years ago, you would have said yes. Blurry faces, distorted images, and unnatural eye movements were dead giveaways. That has changed. We are living in a period where the line between reality and fabrication is disappearing.

Today, it is often nearly impossible to tell a deepfake from a real video, and this has ushered in an age of AI-powered scams. Identity theft has never been easier. In just a few clicks, someone can produce a video of a world leader promoting hate or a clone of your CEO’s voice ordering a wire transfer.

So, how do we fight back? The short answer is deepfake detection technology. In this article, we will look at what deepfake technology is and how deepfake detection technology can help us fight back against it.

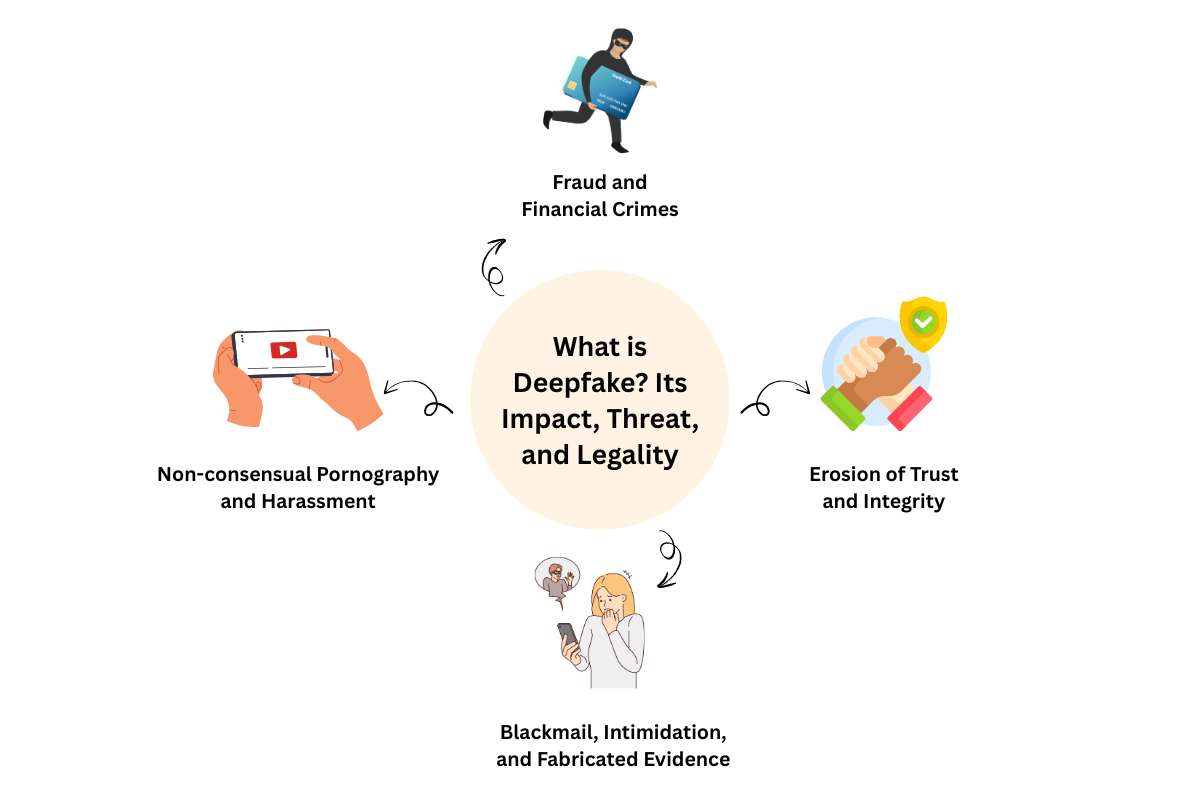

What Is a Deepfake? Its Impact, Threats, and Legality

Deepfake technology uses AI to create highly realistic images, videos, and audio that make people appear to say or do things they never did. The term first appeared on Reddit in 2017. The technology relies on deep neural networks to generate these synthetic visuals and sounds.

While deepfakes can be used for creative or educational purposes, such as recreating historical events, parodies, or simulations, they are often misused for fraud, misinformation, and other malicious activities. This dual nature makes deepfake detection technology essential today to protect individuals, organizations, and society from deception and abuse.

Here are some of the threats posed by deepfake technology:

1. Non-consensual Pornography and Harassment

According to Security Hero, deepfake pornography makes up 98% of all deepfake videos online, and 99% of it targets women. Such videos can be created quickly and at little or no cost, posing serious privacy and reputational risks.

2. Fraud and Financial Crimes

In North America, deepfake fraud surged 1,740% between 2022 and 2023, and financial losses exceeded $200 million in Q1 2025 alone. Scammers use deepfakes to impersonate people in order to obtain money transfers or sensitive information.

3. Erosion of Trust and Integrity

Deepfakes blur the line between real and fake content, threatening journalism, legal evidence, and democracy by undermining trust in digital media.

4. Blackmail, Intimidation, and Fabricated Evidence

Criminals use deepfakes to create fake incriminating videos or audio for blackmail, intimidation, or manipulation of legal and investigatory processes.

Legality of Deepfakes: Why Do We Need Deepfake Detection Technology?

There are no universal laws governing deepfakes, and regulations vary by country. What qualifies as a deepfake remains poorly defined, leaving many manipulations in a grey area. Technology evolves faster than the law, so legislation struggles to keep up. The borderless nature of the internet complicates enforcement across jurisdictions, while anonymity makes it hard to trace creators and hold them accountable.

So, how do we fight this? Well, one way is deepfake detection tools.

What is Deepfake Detection Technology?

Deepfake detection refers to the tools used to distinguish authentic media (images, videos, and audio) from AI-generated content. It is one of the few defenses we have against deepfakes.

Deepfakes used to be easy to detect, but as the technology has advanced, this has become much harder. It is now nearly impossible for the human eye to reliably spot AI-generated content. On top of that, AI can simulate human emotions, accents, and natural tone, which makes synthetic content even more convincing.

That is why we use deepfake detection technology to separate AI-generated content from real content. As of 2025, it is one of the strongest lines of defense against deepfake-enabled crime.

Who Invented Deepfake Detection Tools?

The invention of deepfake detection tools is credited to multiple research teams and individuals.

For example, Wael AbdAlmageed at the Visual Intelligence and Multimedia Analytics Laboratory (VIMAL) developed two generations of deepfake detectors based on convolutional neural networks. Their detectors spotted spatio-temporal inconsistencies, analyzed low-level image frequencies, and built compact representations that distinguish authentic faces from fake ones.
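To make the idea of analyzing low-level image frequencies more concrete, here is a minimal Python sketch of one hand-crafted frequency feature: the share of an image’s spectral energy that sits at high spatial frequencies, computed with NumPy’s FFT. It is not VIMAL’s detector, which learns its representations with CNNs; the function name `high_frequency_energy_ratio` and the 0.25 cutoff are illustrative assumptions. The intuition is that some generation pipelines leave periodic, pixel-level patterns (such as checkerboard-like upsampling artifacts) that concentrate energy at high frequencies.

```python
import numpy as np

def high_frequency_energy_ratio(image: np.ndarray, cutoff: float = 0.25) -> float:
    """Return the share of spectral power above `cutoff` (0..1 of the max radius).

    `image` is a 2D grayscale array. The cutoff is an illustrative assumption,
    not a value taken from any published detector.
    """
    img = np.asarray(image, dtype=np.float64)
    if img.ndim != 2:
        raise ValueError("expected a 2D grayscale image")
    img = img - img.mean()  # drop the DC component so overall brightness is ignored

    # 2D FFT, shifted so the zero frequency sits at the centre of the array.
    power = np.abs(np.fft.fftshift(np.fft.fft2(img))) ** 2
    total = power.sum()
    if total == 0:
        return 0.0  # a perfectly flat image has no spectral content

    # Normalised distance of every frequency bin from the centre (0 = DC, 1 = farthest corner).
    h, w = img.shape
    yy, xx = np.indices((h, w))
    radius = np.hypot(yy - h // 2, xx - w // 2)
    radius /= radius.max()

    return float(power[radius > cutoff].sum() / total)

if __name__ == "__main__":
    y, x = np.mgrid[0:64, 0:64]
    smooth = np.sin(2 * np.pi * x / 64)     # one slow wave across the frame
    checker = ((x + y) % 2).astype(float)   # pixel-level checkerboard pattern
    print(high_frequency_energy_ratio(smooth), high_frequency_energy_ratio(checker))
```

On this 64×64 test, the slow sine wave scores close to 0 and the checkerboard close to 1. A single number like this is far too crude to judge authenticity on its own; in practice, features of this kind would feed a trained classifier.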

Another important contribution comes from Professor Dinesh Manocha and Ph.D. students Trisha Mittal, Uttaran Bhattacharya, and Rohan Chandra. They invented a deepfake detection technology that analyzes both audio and video affective cues (e.g., eye dilation, facial expressions, speech tone, and pace).

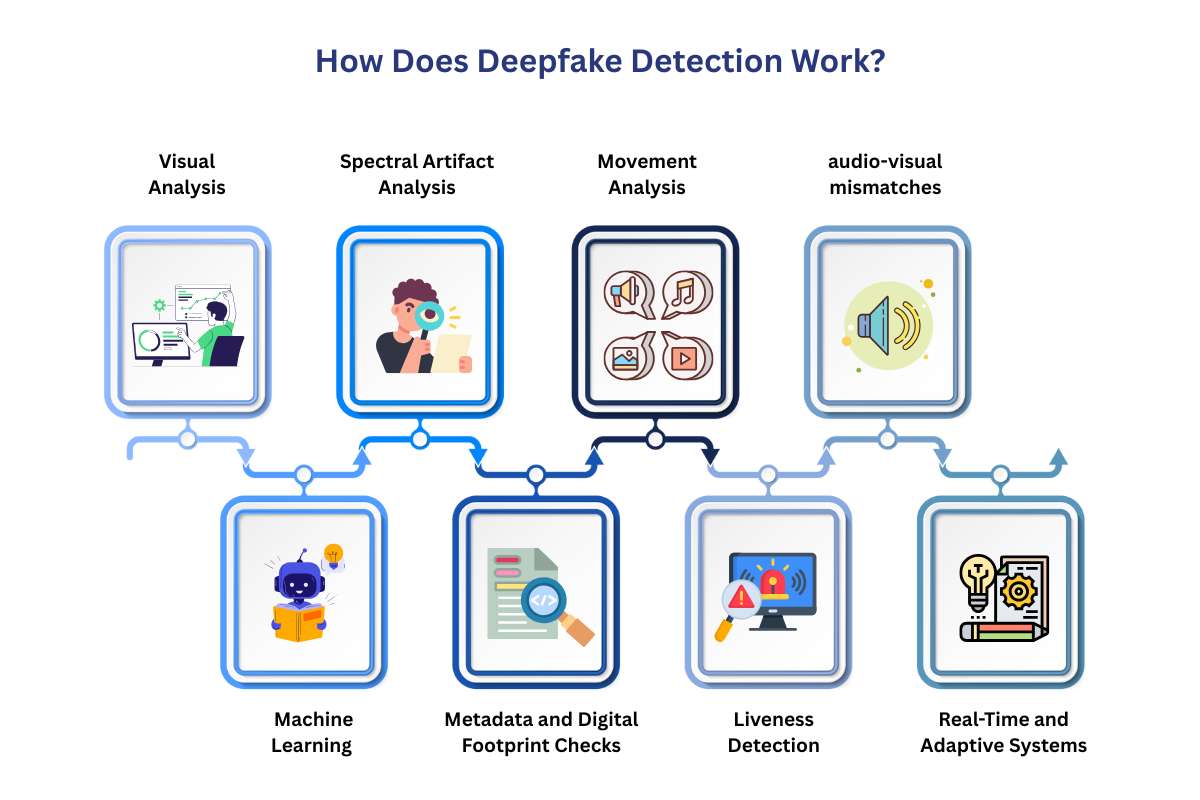

How Does Deepfake Detection Work?

Deepfake detection technology uses AI and machine learning to detect subtle inconsistencies and abnormalities in digital media.

The key mechanisms behind detection technology are:

1. Visual Analysis:

Detects anomalies in facial features, skin texture, blinking, lip movements, lighting, and shadows that don’t align naturally.

2. Spectral Artifact Analysis:

Identifies repeated patterns and unnatural frequency artifacts in video and audio typical of AI-generated deepfakes.

3. Behavioral and Movement Analysis:

Assesses irregular head movements, unnatural expressions, and audio-visual mismatches to verify that behavior looks natural.

4. Audio Analysis:

Examines voice timbre, speech patterns, and lip-sync to detect synthetic voice manipulation.

5. Machine Learning Models:

Uses models trained on large datasets of real and synthetic media to classify new content and flag likely manipulation.

6. Metadata and Digital Footprint Checks:

Analyzes file metadata and manipulation traces left by deepfake tools.

7. Liveness Detection:

Confirms real human presence via 3D facial geometry and user interactions like blinking and head turns.

8. Real-Time and Adaptive Systems:

Operates in real time and continually updates its algorithms to detect new deepfake methods, providing instant authenticity scores.
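Blinking shows up above under both visual analysis and liveness detection. As a rough illustration, here is a Python sketch of one classic blink cue, the eye aspect ratio (EAR), which drops sharply when the eyelids close. It assumes six eye landmarks per frame have already been extracted by a face-landmark model (not shown). The function names, the 0.2 blink threshold, and the plausible range of 8 to 40 blinks per minute are hypothetical values chosen for illustration, not clinical or production thresholds.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six landmarks p1..p6: p1/p4 are the eye corners,
    p2/p3 sit on the upper lid and p6/p5 directly below them on the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def count_blinks(ear_series: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1          # eye currently closed (or closing)
        else:
            if run >= min_frames:
                blinks += 1   # a closed run just ended: count it as one blink
            run = 0
    if run >= min_frames:     # clip ended mid-blink
        blinks += 1
    return blinks

def blink_rate_suspicious(ear_series: Sequence[float], fps: float,
                          low: float = 8.0, high: float = 40.0) -> Tuple[float, bool]:
    """Return (blinks per minute, True if the rate falls outside [low, high])."""
    if not ear_series or fps <= 0:
        raise ValueError("need a non-empty EAR series and a positive fps")
    minutes = len(ear_series) / fps / 60.0
    rate = count_blinks(ear_series) / minutes
    return rate, not (low <= rate <= high)
```

For a one-minute clip at 30 fps that contains only three blinks, `blink_rate_suspicious` reports 3 blinks per minute and flags the clip. Modern deepfakes often blink convincingly, so a check like this is one weak signal among many rather than a verdict.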

What are the Leading Methods Used in Deepfake Detection Technology?

Leading deepfake detection tools use different AI, forensic, and analytic techniques. The most prominent approaches include:

1. Convolutional Neural Networks (CNNs):

Deep learning models trained on large datasets to distinguish real from fake images and videos. They do this by detecting subtle pixel-level abnormalities, artifacts, and inconsistencies in facial features.

2. Temporal and Spatio-temporal Analysis:

Examines inconsistencies over time in videos to detect deepfakes. This includes unnatural blinking, irregular head movement, or mismatched mouth movements during speech.

3. Frequency Domain Analysis:

Identifies digital artifacts that are invisible in pixel space but show up in frequency-domain data, left behind by deepfake generation algorithms.

4. Multimodal Detection:

Combines audio and video analysis to detect inconsistencies between voice and lip movements. It also identifies synthetic alterations in either modality.

5. Biometric Feature Analysis:

Detects abnormal biometric signals such as unnatural eye movement and facial microexpressions. It also checks physiological signals like pulse or eye dilation, which are often altered in deepfakes.

6. Digital Forensics:

This method investigates file metadata, compression artifacts, and inconsistencies in lighting or reflections. In short, it traces the flaws that editing software leaves behind.

7. Liveness Detection:

Verifies user presence in real-time video streams by requiring interactive inputs. It also analyzes 3D face geometry to rule out synthesized or static images.

8. Attention-based Neural Networks:

Uses attention mechanisms within neural networks to focus on critical facial regions or frame sequences most indicative of manipulation.

9. Explainable AI (XAI) Models:

Techniques that not only detect deepfakes but also provide interpretable visualizations and explanations for detected anomalies.
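The lip-sync side of multimodal detection can be sketched as a cross-correlation problem: if speech really drives the mouth, per-frame audio loudness should rise and fall with mouth opening, with little or no delay. The Python sketch below assumes both signals have already been extracted per video frame (for example, mouth opening from facial landmarks and audio energy from the soundtrack). The function names and the 0.1-second and 0.5-correlation thresholds are hypothetical, and this is a generic illustration, not the method of any tool named in this article.

```python
import numpy as np

def _pearson(x: np.ndarray, y: np.ndarray) -> float:
    """Pearson correlation of two equal-length 1D arrays (0.0 if either is constant)."""
    x = x - x.mean()
    y = y - y.mean()
    denom = np.sqrt((x * x).sum() * (y * y).sum())
    return float((x * y).sum() / denom) if denom > 0 else 0.0

def estimate_av_lag(mouth_opening, audio_energy, max_lag: int = 10):
    """Find the frame offset at which audio_energy best tracks mouth_opening.

    A positive lag means the audio trails the video by that many frames.
    Both inputs are per-frame signals of equal length.
    """
    m = np.asarray(mouth_opening, dtype=np.float64)
    a = np.asarray(audio_energy, dtype=np.float64)
    if m.shape != a.shape or m.ndim != 1:
        raise ValueError("expected two 1D signals of equal length")
    n = len(m)
    best_lag, best_corr = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        if abs(lag) > n - 2:
            continue  # need at least two overlapping frames
        if lag >= 0:
            x, y = m[: n - lag], a[lag:]   # compare mouth[t] with audio[t + lag]
        else:
            x, y = m[-lag:], a[: n + lag]  # compare mouth[t - lag] with audio[t]
        corr = _pearson(x, y)
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return best_lag, float(best_corr)

def lip_sync_suspicious(mouth_opening, audio_energy, fps: float,
                        max_offset_s: float = 0.1, min_corr: float = 0.5) -> bool:
    """Flag clips whose best alignment is too far off or too weakly correlated.

    The thresholds are illustrative assumptions, not calibrated values."""
    lag, corr = estimate_av_lag(mouth_opening, audio_energy)
    return abs(lag) / fps > max_offset_s or corr < min_corr
```

If the audio signal is a copy of the mouth signal delayed by four frames at 30 fps (about 0.13 seconds), `estimate_av_lag` recovers a lag of 4 and the clip is flagged; the same signal paired with itself is not flagged. A real system would also have to handle silence, off-screen speakers, and noisy landmarks.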

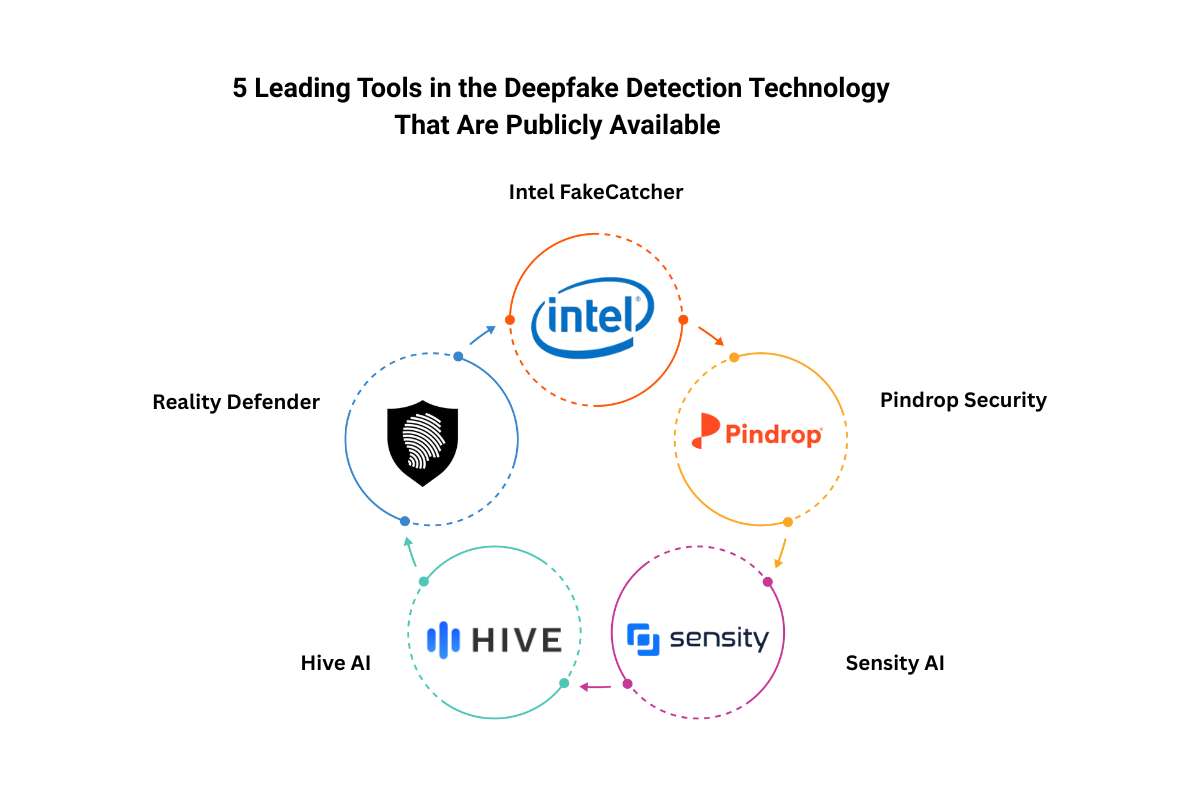

5 Leading Publicly Available Deepfake Detection Tools

Here are 5 publicly available deepfake detection tools. You can use them to check whether your videos, audio clips, and pictures are real.

1. Intel FakeCatcher:

This tool stands out for its unique, real-time approach. Instead of just looking for AI artifacts, FakeCatcher analyzes subtle biological signals, such as blood flow in the face, which are difficult for an AI to replicate. It’s a powerful tool for live video authentication. Intel FakeCatcher is known for its high accuracy in real-world scenarios.

2. Pindrop Security:

While many tools focus on video and images, Pindrop specializes in audio deepfake detection. With a reported 99% accuracy in detecting synthetic speech, it is an important tool for financial institutions and call centers to combat voice-based fraud and protect against scams.

3. Sensity AI:

Considered a market leader, Sensity offers a broad, multimodal platform that detects deepfakes across videos, images, audio, and even AI-generated text. Its accuracy is often cited at 98%. It is trusted by governments and large corporations for its ability to monitor thousands of sources in real time, and it can provide detailed, forensic-level analysis of detected deepfakes.

4. Hive AI:

Hive is a major player in the content moderation industry. It provides a robust deepfake detection API used by platforms and governments, including the U.S. Department of Defense. Its strength lies in quickly and accurately classifying vast amounts of content at scale, which makes it ideal for social media and other high-volume platforms.

5. Reality Defender:

This company has gained a lot of traction for its multi-format detection and enterprise-focused platform. It provides a real-time dashboard and API for businesses to integrate deepfake detection directly into their existing security and content moderation workflows. Its use of “probabilistic detection” helps it identify even subtle, new types of deepfakes.

Understanding the Real-World Applications of Deepfake Detection Technology

Deepfake detection tools help us on various fronts. They help protect individuals from financial fraud, identity theft, blackmail, and all sorts of cybercrimes.

Here are the key real-world applications of deepfake detection technology:

| Use Case | Application |
| --- | --- |
| Identity Fraud Prevention | Banks use it in onboarding and authentication to block synthetic identity fraud. |
| Content Moderation | Social platforms (e.g., TikTok, Meta) flag and remove deepfake content. |
| User Verification & Onboarding | Gig platforms and passwordless logins verify users to stop fake accounts. |
| Brand & Likeness Protection | Entertainment firms protect brands and celebrities from fake endorsements. |
| Forensics & Evidence Validation | Agencies validate digital evidence and detect manipulation in investigations. |
| Impersonation & Fraud Detection | Tools like Reality Defender secure calls, customer service, and executive comms. |
| HR & Workforce Identity Checks | Recruiters verify applicant media to prevent impersonation in hiring. |
| Corporate Safeguarding | Organizations screen internal communications and media to protect employees and executives from deepfake-driven manipulation. |

These are the main ways deepfake detection tools help us. But, like every other technology, deepfake detection has limitations of its own.

Limitations of Deepfake Detection Technology

The “arms race” dynamic is the most fundamental limitation of this technology. Deepfake generators and detectors are locked in a continuous cycle: as detectors get better at finding subtle artifacts, deepfake creators develop new techniques to eliminate those artifacts. This means a detection model trained on today’s deepfakes may be ineffective against the more sophisticated deepfakes of tomorrow.

The second issue is that many deepfake detection models are highly effective on the specific datasets they were trained on but perform poorly on deepfakes created with a different technique or in a new, unseen context. This is a major hurdle for real-world applications, where deepfakes appear “in the wild” rather than in a curated dataset.

A third roadblock is that deepfake detection, especially in real time, is computationally expensive. Examining video frames, checking for inconsistencies, and processing audio all require significant processing power, which is a barrier to widespread, large-scale deployment, such as on social media platforms.
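A quick back-of-the-envelope calculation shows why cost matters. The Python sketch below estimates the work needed for frame-by-frame analysis; the helper name `analysis_cost` and the 50 ms per-frame latency are hypothetical assumptions, since real latencies vary widely with model size and hardware.

```python
import math

def analysis_cost(duration_s: float, fps: float, ms_per_frame: float,
                  sample_every: int = 1):
    """Estimate work for frame-by-frame analysis of one video.

    Returns (frames analysed, compute seconds, real-time factor). A real-time
    factor above 1.0 means analysis takes longer than the clip plays.
    ms_per_frame is a hypothetical per-frame model latency.
    """
    if sample_every < 1:
        raise ValueError("sample_every must be >= 1")
    total_frames = int(duration_s * fps)
    analysed = math.ceil(total_frames / sample_every)
    compute_s = analysed * ms_per_frame / 1000.0
    return analysed, compute_s, compute_s / duration_s

# One minute of 30 fps video with an assumed 50 ms per frame:
print(analysis_cost(60, 30, 50))                   # (1800, 90.0, 1.5): every frame
print(analysis_cost(60, 30, 50, sample_every=5))   # (360, 18.0, 0.3): every 5th frame
```

Under these assumptions, analyzing every frame of a single one-minute clip takes 90 seconds, 1.5 times slower than real time. Sampling every fifth frame cuts the work to 18 seconds, at the risk of missing artifacts that appear in only a few frames.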

Future of Deepfake Detection Tools

The future of AI is very exciting, but it will also bring obstacles like deepfakes, which will inevitably become more capable as technology advances. So, what do we do? Do we try to limit deepfakes? Do we stifle technological growth? No. Instead, we should focus on upgrading detection tools to meet the threat.

The first thing the industry needs to recognize is that the “arms race” approach alone is not working. We need to move beyond simply refining today’s detection methods. Instead, the next generation of solutions should focus on establishing a digital chain of custody that verifies the authenticity of content from its source.

The next generation of deepfake detection technology must integrate cryptographic methods and blockchain to create a tamper-proof record for every piece of media. It will also combine multimodal and behavioral analysis to create a more rounded defense.
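The idea of a tamper-evident record for media can be illustrated with a hash chain, the basic building block behind blockchain-style ledgers. In this minimal Python sketch (standard library only), each custody record stores the SHA-256 hash of the media at that step plus the hash of the previous record, so altering any earlier record breaks the links after it. The record fields, event labels, and helper names are hypothetical. A bare hash chain proves integrity, not authorship: a real provenance system would also sign each record with its creator’s private key, and anchoring the chain on a blockchain is a separate step not shown here.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class CustodyRecord:
    event: str          # hypothetical label such as "captured" or "published"
    content_hash: str   # SHA-256 of the media bytes at this step
    prev_hash: str      # record_hash() of the previous record, "" for the first

    def record_hash(self) -> str:
        # Hash every field, so editing any part of a record changes its hash.
        payload = json.dumps([self.event, self.content_hash, self.prev_hash])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def append_record(chain: List[CustodyRecord], event: str,
                  media: bytes) -> List[CustodyRecord]:
    """Return a new chain with a record linking `media` to the previous record."""
    prev = chain[-1].record_hash() if chain else ""
    return chain + [CustodyRecord(event, sha256_hex(media), prev)]

def verify_chain(chain: List[CustodyRecord], final_media: bytes) -> bool:
    """Check every link, then check that the last record matches the media we hold."""
    if not chain:
        return False
    prev = ""
    for record in chain:
        if record.prev_hash != prev:
            return False  # link broken: an earlier record was altered, removed, or reordered
        prev = record.record_hash()
    return chain[-1].content_hash == sha256_hex(final_media)
```

Verifying a published clip whose face was swapped afterwards fails because its hash no longer matches the last record, and substituting a forged first record fails because the second record still points at the original one.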

Conclusion

We live in an era of digital deception, where the ability to tell real from fake is a matter of trust. As deepfakes become increasingly indistinguishable from reality, they threaten to erode confidence in everything, from our personal relationships to our democratic institutions. The battle against this rising tide of synthetic media needs more than reactive fixes.

It demands a comprehensive plan that combines cutting-edge deepfake detection technology with a fundamental shift in how we approach digital authenticity. By combining digital forensics, behavioral analysis, and proactive source verification, we can begin to rebuild the trust that has been lost.

The future of fighting deepfakes hinges on our ability to stay one step ahead of deepfake creators. The technology is advancing rapidly, and so are the malicious actors who weaponize it. The most effective defense won’t just flag a deepfake after it has been created. Instead, it will validate the integrity of content before it ever has a chance to spread.

This calls for a collaborative effort among tech companies, governments, and the public to implement robust standards and deepfake detection technology. At the end of the day, our success won’t be measured by the number of fakes we detect, but by our ability to create a digital ecosystem where authenticity is the rule, not the exception.

FAQs

Can’t a person just spot a deepfake on their own?

While older, low-quality deepfakes were easier to identify, modern deepfake technology has become incredibly sophisticated. The most advanced deepfakes are nearly impossible for a human to distinguish from reality. This is why deepfake detection tools are becoming a necessary line of defense.

Which companies are leading the way in deepfake detection technology?

A number of companies are at the forefront of this technology, often developing solutions for enterprise-level clients, social media platforms, and government agencies. Some of the notable players include Intel, Microsoft, and Sensity AI.

Will deepfake detection technology ever be perfect?

The consensus among experts is that a perfect, 100% reliable deepfake detection system is unlikely. As soon as a new detection method is developed, deepfake creators start looking for ways to bypass it. So it may be better to also focus on making the creation of deepfakes so difficult, time-consuming, and expensive that malicious use is reduced.
