The Urgent Call for AI Security

As AI continues to evolve rapidly, developers and data scientists are caught up in the rush to create and deploy AI products, and experts are sounding the alarm about security. The excitement of exploring new models, algorithms, and tools is understandable, but it cannot come at the expense of security.

Machine-learning technology is often developed by scientists rather than engineers. They excel in areas such as neural network architectures and training techniques, but security may not be their forte. Once assembled, an AI project resembles traditional software: various components glued together to perform a task. Components pulled from public repositories, however, can carry hidden vulnerabilities and expose systems to supply-chain attacks.

The Invisible Threat: Supply-Chain Attacks in AI

Supply-chain attacks are a serious threat in AI. Just like traditional software, AI projects are vulnerable to malicious packages and tainted datasets. The repercussions extend beyond compromised developer workstations: an attack can spread into corporate networks and cause applications to behave erroneously. The rise of cybersecurity and AI startups underscores the gravity of this threat, with a growing emphasis on auditing and testing machine-learning projects for security vulnerabilities.

The AI supply chain offers malicious actors multiple entry points. One common tactic is typosquatting: publishing a malicious package under a name that closely resembles a popular one, so that a developer installs it by mistake. Such attacks can result in the theft of sensitive data, the hijacking of servers, and the introduction of malware into deployed applications. Recent incidents exposing vulnerabilities in widely used platforms show how much depends on securing the AI supply chain.
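To make the typosquatting risk concrete, here is a minimal sketch of a check that flags a requested package name when it is suspiciously close to, but not identical to, a name the project already trusts. The allowlist `KNOWN_PACKAGES`, the function name `find_lookalike`, and the 0.85 similarity threshold are all assumptions chosen for illustration; a real project would derive the allowlist from its own dependency manifest and tune the threshold.

```python
from difflib import SequenceMatcher

# Hypothetical allowlist: packages this project actually intends to use.
KNOWN_PACKAGES = {"numpy", "requests", "tensorflow", "torch", "transformers"}


def find_lookalike(name, known=KNOWN_PACKAGES, threshold=0.85):
    """Return the trusted package that `name` closely resembles, or None.

    An exact match is treated as safe and returns None. The threshold is
    an illustrative assumption, not a recommended value.
    """
    candidate = name.lower()
    if candidate in known:
        return None

    best, best_score = None, 0.0
    for pkg in sorted(known):  # sorted for deterministic tie-breaking
        score = SequenceMatcher(None, candidate, pkg).ratio()
        if score > best_score:
            best, best_score = pkg, score

    return best if best_score >= threshold else None


if __name__ == "__main__":
    for requested in ["requests", "reqeusts", "tensorfow", "flask"]:
        match = find_lookalike(requested)
        if match:
            print(f"WARNING: '{requested}' looks like '{match}'")
        else:
            print(f"ok: '{requested}'")
```

A check like this only catches near-miss spellings of names already on the allowlist; it says nothing about whether an unfamiliar package such as `flask` in the example is itself trustworthy.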

Navigating the Complex Terrain of AI Security

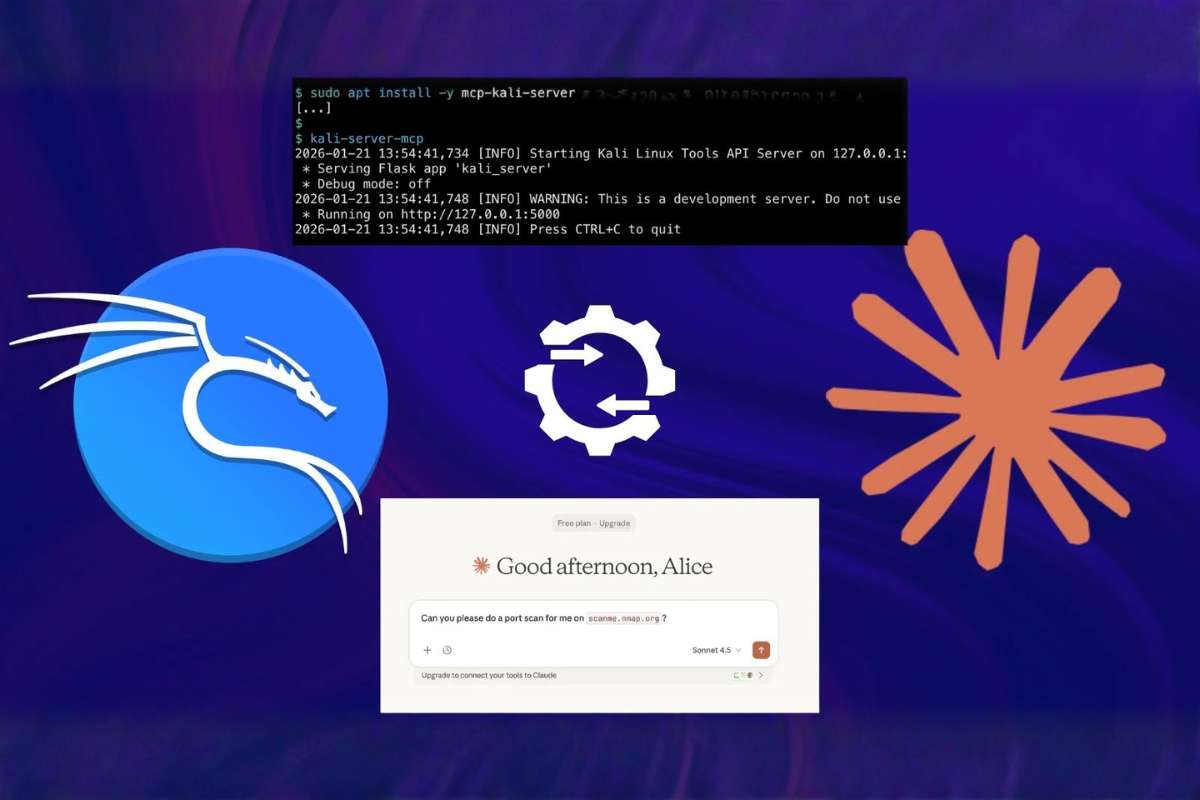

Addressing security challenges in AI requires a concerted effort from both industry players and developers. Startups specializing in AI security are emerging, but established organizations must also make security a priority. Supply-chain security practices, such as digitally authenticating code contributions, are crucial to reducing the risk of malicious alterations. Developers must also conduct thorough security assessments and penetration tests before deploying AI solutions.
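One simple way to detect malicious alterations is to pin a cryptographic digest of each downloaded artifact and refuse to use anything that does not match. The sketch below illustrates that idea with SHA-256 checksums. Note that this is checksum pinning, a simpler technique than the digital authentication of contributions mentioned above: it detects tampering after the digest was recorded, but it does not prove who produced the artifact. The function names and the sample data are hypothetical.

```python
import hashlib
import hmac


def sha256_of(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of `data`."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_sha256: str) -> bool:
    """Check `data` against a digest pinned ahead of time.

    compare_digest performs a constant-time comparison of the two hex
    strings.
    """
    return hmac.compare_digest(sha256_of(data), expected_sha256.lower())


if __name__ == "__main__":
    # Stand-in for a downloaded model file or package (illustrative only).
    artifact = b"example model weights"
    pinned = sha256_of(artifact)  # recorded when the artifact was reviewed

    print(verify_artifact(artifact, pinned))                # unmodified copy
    print(verify_artifact(artifact + b" tampered", pinned))  # altered copy
```

In practice the pinned digest would live in a lock file or manifest under version control, so that changing it requires a reviewed commit.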

Despite growing awareness of AI security risks, the pace of innovation poses a formidable challenge. With countless tools and models being developed and released, keeping security measures comprehensive is an ongoing effort. As AI continues to evolve, stakeholders must remain vigilant and proactive in guarding against supply-chain threats to protect the integrity and safety of AI systems.